Ep 203 | Nate Soares

If Anyone Builds It, Everyone Dies: How Artificial Superintelligence Might Wipe Out Our Entire Species

Description

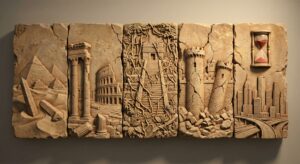

Technological development has always been a double-edged sword for humanity: the printing press increased the spread of misinformation, cars disrupted the fabric of our cities, and social media has made us increasingly polarized and lonely. But not since the invention of the nuclear bomb has technology presented such a severe existential risk to humanity as it does now, with the possibility of Artificial Superintelligence (ASI) on the horizon. Were ASI to come to fruition, it would be so powerful that it would outcompete human beings in everything – from scientific discovery to strategic warfare. What might happen to our species if we reach this point of singularity, and how can we steer away from the worst outcomes?

In this episode, Nate is joined by Nate Soares, an AI safety researcher and co-author of the book If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All. Together, they discuss many aspects of AI and ASI, including the dangerous unpredictability of continued ASI development, the “alignment problem,” and the newest safety studies uncovering increasingly deceptive AI behavior. Soares also explores the need for global cooperation and oversight in AI development and the importance of public awareness and political action in addressing these existential risks.

How does ASI present an entirely different level of risk than the conventional artificial intelligence models that the public has already become accustomed to? Why do the leaders of the AI industry persist in their pursuits, despite acknowledging the extinction-level risks presented by continued ASI development? And will we be able to join together to create global guardrails against this shared threat, taking one small step toward a better future for humanity?

About Nate Soares

Nate Soares is the President of the Machine Intelligence Research Institute (MIRI), and plays a central role in setting MIRI’s vision and strategy. Soares has been working in the field for over a decade, and is the author of a large body of technical and semi-technical writing on AI alignment, including foundational work on value learning, decision theory, and power-seeking incentives in smarter-than-human AIs. Prior to MIRI, Soares worked as an engineer at Google and Microsoft, as a research associate at the National Institute of Standards and Technology, and as a contractor for the US Department of Defense.

Show Notes & Links to Learn More

The TGS team puts together these brief references and show notes for the learning and convenience of our listeners. However, most of the points made in episodes hold more nuance than one link can address, and we encourage you to dig deeper into any of these topics and come to your own informed conclusions.

00:00 – Nate Soares, LinkedIn, Machine Intelligence Research Institute (MIRI), MIRI Technical Governance Team

04:43 – Artificial Superintelligence (ASI) vs AI chatbots (LLMs)

05:11 – Large AI companies working toward ASI: OpenAI, Meta, Microsoft, Anthropic

06:23 – Habitable zone for humans on Earth is narrow (Paper)

08:18 – On intelligence, Our brains are constantly doing tasks of prediction (Implicit anticipation)

10:47 – The madness of crowds (Crowd psychology, Groupthink), Garry Kasparov vs. the world

13:15 – Stockfish

15:40 – Deep Blue vs. Garry Kasparov

16:38 – Leo Szilard on realizing the possibility of a nuclear chain reaction

17:38 – “MechaHitler” incident with xAI’s Grok chatbot

17:45 – Some LLMs emulate our delusion, self-deception, and overconfidence, AI-induced psychosis

18:04 – Cognitive atrophy and AI use

19:24 – LLMs are the majority of what AI / tech companies are developing right now

22:02 – Training an LLM

24:17 – Deaths linked to chatbots

28:41 – The AI Alignment problem

34:59 – Fertility rate by country, 2100 global population projections

37:48 – Eating junk food and what it does in the brain with dopamine

39:18 – Eliezer Yudkowsky

41:28 – Heads of AI labs publicly warning that these models might cause human extinction

41:53 – Prisoner’s dilemma (Reality Blind example, Video example)

42:11 – Elon Musk decided he would “rather be a participant than a bystander”

44:06 – Stuxnet, recent U.S. kinetic strikes against Iran

46:34 – AI “sentience” incident in 2022

47:13 – Shutdown resistance in reasoning models

48:19 – Some AIs have begun to realize when they’re in a test

50:28 – 2001: A Space Odyssey (HAL 9000), 2010: Odyssey Two, The Foundation Trilogy

51:48 – Three Laws of Robotics

54:33 – AI is self-aware that it needs electricity/energy to continue running

1:00:18 – Energy and AI, Humans run on as much electricity as a lightbulb

1:01:52 – AIs can talk to one another already

1:02:23 – AI-induced psychosis

1:03:53 – Economic Superorganism

1:08:59 – Spandrel

1:11:14 – Geoffrey Hinton “Godfather of AI,” Hinton warnings about ASI

1:11:23 – Yoshua Bengio, Bengio warning about dangerous AI

1:11:38 – Elon Musk: 10-20 percent chance AI “goes bad”

1:11:42 – Dario Amodei of Anthropic: “25 percent chance things [with AI] go really, really badly”

1:11:56 – Sam Altman: “2 percent chance” AI will cause human extinction

1:16:55 – Oracle 500 percent debt-to-equity ratio despite good revenue

1:17:52 – Dot-com bubble

1:18:49 – 58 percent of people think the current AI development is not well regulated (as of 2024), 50 percent say they’re more concerned than excited about the increased use of AI (as of 2025)

1:20:41 – U.K.’s AI Security Institute

1:20:50 – Bipartisan Senate bill for congressional regulation of AI

1:21:13 – U.S. restrictions on AI computer chip sales to other nations

1:22:21 – Draft of Global AI Treaty

1:22:43 – Changing computer chip manufacturing industry

1:30:23 – OpenAI founding emails regarding control over AGI

1:31:42 – Online resources for If Anyone Builds It, Everyone Dies

1:32:02 – AI Futures Project, AI 2027

1:33:03 – Marie Curie died of cancer, Isaac Newton poisoned himself with mercury